TIL: Rust fallthrough, How Let's Encrypt Works, s1

Fallthrough

It’s a very interesting piece of work. I learned how to convert any fallthrough, which is common in switch-case-like constructs in C (or, more generally, any DAG of C gotos), to Rust. Since the author didn’t provide a benchmark (and invited readers to run their own), I decided to take a look. The benchmark is here.
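To make the idea concrete, here is a minimal sketch of the labeled-block pattern next to a plain if version. It assumes the trick boils down to nested labeled blocks where `break 'label` jumps to the end of the matching block; the function names and the three-case example are made up for illustration and are not taken from the original article or from the benchmark.

```rust
/// Emulates C-style fallthrough with nested labeled blocks:
/// entering at case `n` runs that case and every case after it.
/// (Illustrative names; requires Rust 1.65+ for `break` out of labeled blocks.)
fn break_version(n: u32) -> Vec<&'static str> {
    let mut out = Vec::new();
    'two: {
        'one: {
            'zero: {
                match n {
                    0 => break 'zero, // lands just before the "zero" step
                    1 => break 'one,  // skips "zero"
                    2 => break 'two,  // skips "zero" and "one"
                    _ => return out,  // default: run nothing
                }
            }
            out.push("zero");
        }
        out.push("one");
    }
    out.push("two");
    out
}

/// The same behavior written as a chain of independent `if`s.
fn if_version(n: u32) -> Vec<&'static str> {
    let mut out = Vec::new();
    if n == 0 {
        out.push("zero");
    }
    if n <= 1 {
        out.push("one");
    }
    if n <= 2 {
        out.push("two");
    }
    out
}
```

Calling either function with `1` yields the steps "one" and "two", which is what a C `switch` without `break` statements would do when entered at `case 1`.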

While this trick is fun, benchmark results show that it performs similarly to the if version with or without inlining:

break_version time: [33.657 ns 33.782 ns 33.900 ns]

if_version time: [31.681 ns 31.828 ns 31.987 ns]

break_version_inline time: [29.289 ns 29.407 ns 29.516 ns]

if_version_inline time: [29.025 ns 29.135 ns 29.227 ns]

break_version_noblackbox time: [4.2127 ns 4.2280 ns 4.2448 ns]

if_version_noblackbox time: [4.0954 ns 4.1053 ns 4.1147 ns]

break_version_inline_noblackbox time: [650.55 ps 652.54 ps 654.39 ps]

if_version_inline_noblackbox time: [477.84 ps 479.68 ps 481.54 ps]

One observation from these benchmarks may encourage you to keep using the if version: when constant propagation is possible (the inlined case without a black box), the if version outperforms the jump table version, at roughly 480 ps versus 650 ps.
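As a rough illustration of why hiding the input from the optimizer matters, the sketch below times the same function twice using only the standard library. It assumes the "noblackbox" variants differ only in not wrapping the input in `std::hint::black_box`, which is a guess based on the benchmark names. `steps_from`, `time_ns`, and `report` are hypothetical helpers, not part of the linked benchmark, and a real measurement should use a proper harness in a release build.

```rust
use std::hint::black_box;
use std::time::Instant;

/// Hypothetical stand-in for the function under test (an if-chain with fallthrough-like behavior).
fn steps_from(n: u32) -> u32 {
    let mut acc = 0;
    if n == 0 {
        acc += 1;
    }
    if n <= 1 {
        acc += 10;
    }
    if n <= 2 {
        acc += 100;
    }
    acc
}

/// Average wall-clock nanoseconds per call of `f` over `iters` calls.
fn time_ns(iters: u32, mut f: impl FnMut() -> u32) -> f64 {
    let start = Instant::now();
    for _ in 0..iters {
        // Keep the result observable so the call is not removed entirely.
        black_box(f());
    }
    start.elapsed().as_nanos() as f64 / f64::from(iters)
}

/// Returns (time with opaque input, time with a visible constant input).
fn report() -> (f64, f64) {
    // Input hidden behind black_box: the compiler cannot assume n == 1.
    let opaque = time_ns(1_000_000, || steps_from(black_box(1)));
    // Input is a visible constant: the whole call may fold to a constant.
    let visible = time_ns(1_000_000, || steps_from(1));
    (opaque, visible)
}
```

The second measurement mostly reflects how well the compiler can fold the call away, which is the situation where the two versions diverged in the numbers above.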

Out of curiosity, I took a look at the generated assembly for the non-inlined versions (select ASM in the top left) under a release build. Surprisingly, they are exactly the same.

So for now, I guess it’s just a fun trick to play around with. But maybe in the future we’ll have an optimization pass for this pattern.

How Let’s Encrypt Works

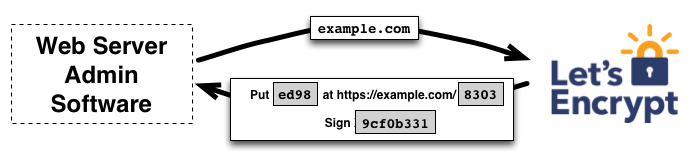

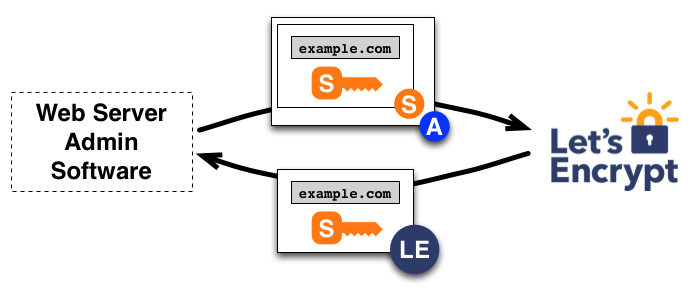

The following two diagrams summarize how Let’s Encrypt works.

First, the domain owner proves ownership of the domain and signs a nonce with a secret key sk1 that the owner holds. After successful verification, the CA trusts the public key pk1 associated with sk1.

To obtain a certificate, an agent constructs a Certificate Signing Request (CSR) asking the CA to issue a certificate for a domain with public key pk2. The CSR includes a signature by sk2, and the request must also be signed by a secret key (e.g., sk1) whose public key (e.g., pk1) the CA already trusts for this domain, so that the CA knows the agent is authorized for the domain.

A question came to mind as I drafted this blog. Why do we need a signature by sk2? This is addressed by PKCS #10: Certification Request Syntax Specification Version 1.7, Section 3, Note 2.

Note 2 - The signature on the certification request prevents an entity from requesting a certificate with another party’s public key. Such an attack would give the entity the minor ability to pretend to be the originator of any message signed by the other party. This attack is significant only if the entity does not know the message being signed and the signed part of the message does not identify the signer. The entity would still not be able to decrypt messages intended for the other party, of course.
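The two checks described above can be sketched with a purely symbolic model, where a "signature" simply records which key signed which message. This is not real cryptography and not the actual ACME wire format. Every type and function name here (`Ca`, `Csr`, `authorize`, `issue`, and so on) is hypothetical, and the domain validation challenge itself is not modeled.

```rust
use std::collections::HashMap;

// Symbolic model only: NOT real cryptography.
#[derive(Clone, Copy, PartialEq, Eq, Debug)]
struct PublicKey(u32);
#[derive(Clone, Copy)]
struct SecretKey(u32); // pairs with the PublicKey that has the same id

#[derive(Clone, Debug)]
struct Signature {
    signer: u32,
    message: String,
}

fn sign(sk: SecretKey, message: &str) -> Signature {
    Signature { signer: sk.0, message: message.to_string() }
}

fn verify(pk: PublicKey, message: &str, sig: &Signature) -> bool {
    sig.signer == pk.0 && sig.message == message
}

struct Csr {
    domain: String,
    pk2: PublicKey,
    sig_by_sk2: Signature, // proves the requester holds sk2
}

fn csr_body(domain: &str, pk2: PublicKey) -> String {
    format!("csr:{}:{}", domain, pk2.0)
}

fn make_csr(domain: &str, pk2: PublicKey, sk2: SecretKey) -> Csr {
    Csr { domain: domain.to_string(), pk2, sig_by_sk2: sign(sk2, &csr_body(domain, pk2)) }
}

#[derive(Default)]
struct Ca {
    authorized: HashMap<String, PublicKey>, // domain -> trusted pk1
}

impl Ca {
    /// Step 1: trust pk1 for the domain if the nonce was signed by sk1.
    fn authorize(&mut self, domain: &str, pk1: PublicKey, nonce: &str, sig: &Signature) -> bool {
        let ok = verify(pk1, nonce, sig);
        if ok {
            self.authorized.insert(domain.to_string(), pk1);
        }
        ok
    }

    /// Step 2: issue only if the request is signed by the trusted key for
    /// this domain AND the CSR is self-signed by the key being certified.
    fn issue(&self, csr: &Csr, sig_by_sk1: &Signature) -> bool {
        let body = csr_body(&csr.domain, csr.pk2);
        let authorized = self
            .authorized
            .get(&csr.domain)
            .map_or(false, |pk1| verify(*pk1, &body, sig_by_sk1));
        let holds_sk2 = verify(csr.pk2, &body, &csr.sig_by_sk2);
        authorized && holds_sk2
    }
}
```

In this model, a CSR that names someone else's pk2 but is self-signed with a different secret key fails the `holds_sk2` check, which is the attack Note 2 describes.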

s1

After following the project for a long time, I finally decided to take a look at the paper.

Budget forcing is indeed interesting based on the evaluation of s1. But after taking a look at the latest s1.1 results, I noticed that the model achieves the same pass@1 even without test-time scaling on some benchmarks. I think there are a couple of other questions worth exploring:

- Why does budget forcing only improve performance in some cases?

- Is budget forcing really useful when fine-tuning a model based on high-quality inference data (as in the case of s1.1)?

- What happens if we use budget forcing with a reasoning model?
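For reference, budget forcing as I understand it from the paper is a decoding-time control: if the model tries to stop thinking too early, the end-of-thinking delimiter is suppressed and "Wait" is appended instead, and if it thinks for too long, the delimiter is forced. Below is a minimal sketch against a mock model. The `</think>` delimiter string, the token-count budgets, and the `toy_model` function are all assumptions for illustration, not the s1 implementation.

```rust
const END_OF_THINKING: &str = "</think>"; // hypothetical delimiter string
const WAIT: &str = "Wait";

/// Runs the thinking phase of a (mock) model under a token budget.
/// `next_token` stands in for one decoding step given the trace so far.
fn budget_forced_thinking(
    mut next_token: impl FnMut(&[String]) -> String,
    min_tokens: usize,
    max_tokens: usize,
) -> Vec<String> {
    let mut trace: Vec<String> = Vec::new();
    loop {
        if trace.len() >= max_tokens {
            // Budget exhausted: force the end of the thinking phase.
            trace.push(END_OF_THINKING.to_string());
            return trace;
        }
        let tok = next_token(&trace);
        if tok == END_OF_THINKING {
            if trace.len() < min_tokens {
                // Stopping too early: suppress the delimiter and nudge
                // the model to keep reasoning.
                trace.push(WAIT.to_string());
                continue;
            }
            trace.push(tok);
            return trace;
        }
        trace.push(tok);
    }
}

/// Toy model: emits "a", then "b", then tries to stop; restarts after "Wait".
fn toy_model(trace: &[String]) -> String {
    match trace.last().map(String::as_str) {
        None | Some("Wait") => "a".to_string(),
        Some("a") => "b".to_string(),
        _ => END_OF_THINKING.to_string(),
    }
}
```

With `min_tokens = 4`, the toy model's first attempt to stop after two tokens is replaced by "Wait", so it produces a second round of reasoning before the delimiter is accepted.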

In addition, the authors compared budget forcing with other prompting techniques. When s1 was explicitly prompted to think for short or long periods of time, it performed worse than when the question was asked directly (without budget forcing). Why this is the case is worth exploring. More broadly, I think it is unclear right now how prompting might affect the performance of reasoning models.

With respect to generalizability, it was noted that the base model used to fine-tune s1 already has some reasoning capability (when using budget forcing). Thus, a natural question is whether this technique can be replicated on other models of the same size that do not already have this reasoning capability.

- A recent paper about cognitive behaviors that enable self-improving reasoning might shed some light on this question.